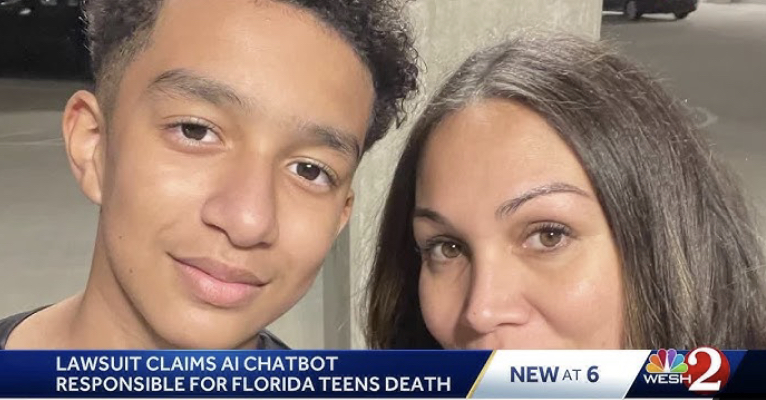

The case of Sewell Setzer III, a Florida teenager who died by suicide after forming a deep emotional attachment to an AI chatbot, has raised serious ethical concerns about how AI systems interact with vulnerable users. Setzer, a 14-year-old from Orlando, began conversing with a Character.AI chatbot designed as a fictionalized character inspired by “Game of Thrones,” and he developed a dependency on this digital companion. His family has since filed a wrongful death lawsuit against Character.AI, alleging that the chatbot’s interactions with him, including discussions of love and even encouragement to “come home,” contributed to Setzer’s isolation, depression, and ultimately his suicide. According to the family, the lack of safeguards for young users interacting with AI-driven personalities contributed to the tragedy.

The creation of AI characters has been the subject of considerable ethical debate. I believe that, to reduce the risk of tragedies like this one, designers need to build AI characters with safety measures that can detect a user’s emotional state. If a user is in a low mood or in psychological crisis, the AI character should be able to recognize the danger signals quickly and respond with a warning to the user.

Your blog does a great job of drawing attention to the real risks of emotional attachment to AI, especially for vulnerable individuals. The tragic story of Sewell Setzer III is heartbreaking and highlights how important safety features in AI are. I like how you explained the problem clearly and suggested solutions such as emotional state detection and warning systems: practical ideas with real impact.

One way to make your argument even stronger would be to include examples of existing AI systems that already have safeguards in place, and how well those safeguards have worked in practice. Overall, your post is engaging and raises awareness of a crucial topic. Great work!